Top 10 Inventions Of 2010: Popular Science's 'Garage Invention' Awards

'Garage inventors' is a term for individuals or small groups who invent independently. They are not paid a salary or incentives by a company to invent; they work on their own, generally in someone's garage or another part of the home. Popular Science recognizes the accomplishments of these independent inventors every year in its June issue. Here are the 10 winning inventions...

(The following inventions are not ranked; just numbered for convenience.)

1. OneBreath: An Inexpensive Portable Ventilator

OneBreath portable ventilator system with inventor Matthew Callaghan: image via PopSci.com

Inspired by the need to help more patients in a crisis such as a pandemic, Matthew Callaghan, a postdoctoral fellow at Stanford University, developed a no-frills ventilator that runs on a 12-volt battery for up to 12 hours and is easy to transport.

Because hospital ventilators typically cost from $3,000 to $40,000, hospitals generally would not have enough of them for every patient who needs one in a pandemic. Callaghan and a few fellow students took on the ventilator project so that hospitals would be prepared... just in case. Their device uses a $10 pressure sensor like the one you would find in a blood pressure monitor. It pumps air into the chest through the mouth, and a sensor monitors how much air is in the lungs. Software processes the sensor data and lets the ventilator know when the patient needs air again.

2. KOR-fx: Ultra Sensation Gaming Device

KOR-fx shown by inventor Shahriar S. Afshar: Photo: John B. Carnett, image via PopSci.com

The KOR-fx is a device that connects to gaming consoles, PCs, or music players. It sits around the shoulders, and the two transducers that rest on the chest translate stereo sound into stereo vibrations. That way, gamers can feel completely immersed in their games without disturbing others who are not playing. “We can induce the sensation of rain, wind, weight shift, even G-forces,” Afshar said. His company, Immerz, is in talks with several studios to add these effects to films.

3. SmartSight: A Third Eye For Assault Rifles

SmartSight outfitted rifle, inventor Matthew Hagerty: Photo: John B. Carnett, image via PopSci.com

The whole device weighs only three pounds, and though Hagerty says he would like to make it even lighter, his SmartSight invention, as it is, could save thousands of soldiers' lives in ambushes. Just think about being able to point and shoot a weapon at a target without even physically facing it.

4. EverTune: Guitar Tuning Revolutionized

EverTune, inventors Cosmos Lyles and Paul Dowd: Photo: John B. Carnett, image via PopSci.com

Lyles and Dowd are in talks with guitar makers to embed EverTune in new guitars, but EverTune units will also be made separately to fit many older guitars. I'm just wondering what guitarists will do to buy some extra time between sets now... drink some more water, I suppose.

5. SoundBite: Non-Surgical Bone Conduction Hearing Aid For One-Sided Deafness

SoundBite invented by Amir Abolfathi: Image by Paul Wooten via PopSci.com

Hearing aids amplify external sounds for those who have some residual hearing. But when the cochlea (the auditory portion of the inner ear) doesn't function, hearing aids don't do any good. For single-sided deafness involving the cochlea, there is a surgically implanted titanium device, nicknamed BAHA, that is anchored to the base of the skull. But Amir Abolfathi, a former Invisalign vice president, came up with a new idea while sitting in traffic one day. (That's when inventors get their best ideas!) Knowing that teeth are excellent conductors of sound to bone, he thought, why not create a bone conduction aid that works from the mouth? With the help of an otolaryngologist, Abolfathi developed the SoundBite, an acrylic tooth insert (a custom-molded retainer) with a receiver that picks up sound from an in-ear microphone and then transmits it from the teeth through the bone, up the jawline, to the cochlea.

In clinical trials of the device, patients typically reported that the SoundBite restored 80 to 100 percent of their hearing.

6. Groasis Waterboxx: A Biomimetic Planter

Groasis Waterboxx, inventor Pieter Hoff: Photo: John B. Carnett, image via PopSci.com

In his past life as a flower exporter, Pieter Hoff often watched over his lilies in the evening. He noticed that the plants collected condensation on their leaves and that the water droplets were sucked in by the leaves as they cooled. Mimicking nature's efficient watering system, Hoff developed a planter that could capture water in the same manner to foster sapling trees even in harsh conditions.

The Groasis Waterboxx is designed as a plant incubator that cools faster than the night air, allowing water to condense and flow into it, along with rainwater, to keep the plant and its roots hydrated and protected. Hoff's tests of the Waterboxx in the Sahara have been quite successful; after one year of growing saplings in the desert, 88 percent of the trees he planted had green leaves, while 90 percent of those planted using the local method died from the scorching sun. Check out groasis.com and help test these Waterboxxes!

7. Zoggles: Anti-Fog Device

Zoggle inventors Don A Skomsky and Valerie Palfy: Photo: John B. Carnett, image via PopSci.com

Skomsky was able to use an obscure formula that predicts when fog will form, based on temperature and humidity, so that the bulky controls could all fit on a chip. The Zoggles now operate with that chip, which calculates when the lens needs to be heated and activates a heater that shuts off when it is no longer needed. Palfy and Skomsky are planning to license their technology to manufacturers of motorcycle helmets, windshields, scuba masks, and military gear.
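The article does not say which formula Skomsky used. As a rough illustration of the general idea only, the sketch below uses the Magnus approximation for dew point, a standard textbook formula, and flags fog risk when a lens is at or near that dew point. The constants, the function names, and the one-degree safety margin are all assumptions made for this example, not details of the Zoggles chip.

```python
import math

# Magnus approximation constants (a common textbook choice).
# These are NOT taken from the Zoggles design.
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degrees Celsius


def dew_point_c(air_temp_c: float, relative_humidity_pct: float) -> float:
    """Estimate the dew point in degrees C from air temperature and relative humidity."""
    if not 0 < relative_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(relative_humidity_pct / 100.0) + (
        MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    )
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def lens_needs_heat(lens_temp_c: float, air_temp_c: float,
                    relative_humidity_pct: float, margin_c: float = 1.0) -> bool:
    """Return True when the lens is at or near the dew point, so fog is likely."""
    # margin_c is a hypothetical safety margin chosen for illustration.
    return lens_temp_c <= dew_point_c(air_temp_c, relative_humidity_pct) + margin_c
```

With this kind of check, a heater only has to run while `lens_needs_heat` is true, which matches the on-when-needed behavior described above.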

8. Mini Infuser: Foolproof Programmable, Disposable Infusion Drug Pump

Mini Infuser invented by Mark Banister: Photo: John B. Carnett, image via PopSci.com

The Mini Infuser is the only disposable drug pump that can be programmed to dispense drugs continuously. The pump is taped to the patient's chest, and a microprocessor inside it sends dosage information to the polymer that Banister developed to deliver the correct dosages. On receiving the dosage information, this special polymer expands and displaces the proper dosage from the reservoir within the pump where the drugs are stored.

9. ECO-Auger: Fish-Saving Tidal Energy Turbine

ECO-Auger, invented by W. Scott Anderson: Photo: John B. Carnett, image via PopSci.com

Anderson had made several small prototypes of the ECO-Auger to test function and safety around fish, and he has now hand-crafted his first large prototype, which has a two-foot diameter and a polyurethane/fiberglass auger. In a test, Anderson said, it captured 14 percent of the water's energy, which is less than wind turbines capture, but Anderson says the percentage will go up as the diameter of the auger increases. He is sure that ultimately the ECO-Auger will be more cost-effective than the wind turbine and just as productive.

10. RAD Technology: A Drag-Ready Snowmobile

RAD Technology, invented by Shawn Watling: Photo: John B. Carnett, image via PopSci.com

Shawn Watling, a self-taught engineer, has created the first rear-drive snowmobile with adjustable rear suspension, one that is faster, safer, and more efficient than the snowmobiles produced today. Having been around snowmobile racing since he was only 9 months old (presumably as a passenger), the 35-year-old Watling decided to build his own snowmobile out of a scrapped ATV and a 130-horsepower snowmobile motor and transmission, to drag race on his local drag strip.

The 'Frankenstein' was fast, and a dynamometer test revealed that 85 percent of its engine power was delivered to the ground, while a typical snowmobile delivered only about 55 percent. This result led him to discover that the rear suspension on front-drive production snowmobiles increased rolling resistance and prevented adequate track tension.

Watling has built numerous rear-drive prototypes since Frankenstein, and five years later he has corrected everything that slows a snowmobile down. His RAD (rear-axle-drive) Technology has also produced a safer, more fuel-efficient snowmobile.

Laptop Buyer's Guide

One cannot make an intelligent buying decision without first knowing what parts and features together constitute a notebook. Once we know these components, we can go on to specify which parts and features the notebook must have and which we can compromise on or forgo, given the cost and resulting benefits of each decision.

By specifying, I mean either a made-to-order (built-to-order) notebook that is shipped to the consumer in a week or two, or a ready-built notebook bought off the shelf from a retail vendor. Both Lenovo and Dell let the consumer build a notebook to their own specifications, which assumes that the buyer knows what he or she wants in the notebook. Even when buying off the shelf, it helps to know what comes with the system in exchange for one's hard-earned money.

One cannot imagine a blindfolded customer randomly pointing a finger at one notebook (that one!) among the many on display at a retail shop, buying it, and walking away. Yet even if you are in a computer shop with your eyes wide open, it makes no difference if you don’t have some basic understanding of what a notebook is (or does).

Let me give you an example. Suppose you have decided to buy a Lenovo (IBM) ThinkPad notebook because you overheard someone mention it in conversation. (I chose the Lenovo (IBM) brand for its many virtues, excellent online documentation being one of them.) You also saw this ad on Lenovo’s home page, felt impelled to buy immediately, and decided that it is a no-brainer. What can go wrong with this decision? You say to yourself, “Look at the shiny black notebook with a glowing image on its screen! I cannot wait to tap on its beautiful keyboard.”

Congratulations, you have made the decision. But which one of the many ThinkPads is going to give you this much saving? And do you need all that it offers?

The image below shows the many different configurations in which just this one model (ThinkPad) of one particular brand (Lenovo) can come.

But which one? A bewildering array of possibilities, isn’t it? We haven’t even talked about price yet. Somehow you arrive at the configuration shown below, which costs $1,275 and goes up from there with every upgrade.

You are ready to click the “Add to cart” button and pay by credit card. But wait. Suddenly you remember some flyers from Futureshop and Staples, dropped at your home, that detail special offers on notebooks. At this point you make a price comparison. Of course, the flyers talk about different models and brand names. As an example, see the one below:

Although you should compare apples to apples, you see that for much less money you get a laptop with an all-in-one printer thrown in for free in this Futureshop special offer!

You think this is a better deal and you are getting value for money. So you change your mind. But are you sure? Do you see the complexity now?

To conclude, no deal is good or bad by itself. It has to be seen in context, and the context is what you intend to do with the laptop, what comes with it, and whether it can serve your needs. While you may have a good idea of what you intend to do with it, you should also be clear about what goes into making a laptop.

Although the battery is visible (close the laptop and turn it over to see the bottom), as shown below, it is not something we interact with while working. Mobility is a prime characteristic of a laptop, and the battery plays a major part in it. Imagine looking for a wall socket in the airport lounge because your battery is down and you have important messages to check before your flight. So it pays to look closely at the specifications of the battery that comes with the laptop, and to find out whether a higher-capacity battery can be ordered in place of the standard one.

The rest, such as the processor, system memory, and hard drive, are not visible but work silently behind the scenes to deliver the desired results. To complete this discussion, an exploded diagram of a laptop is shown on the left; you can see that the processor, system board, system memory, hard drive, etc. are safely encased within the shell and out of sight. While you may not come into physical contact with these components in everyday use, selecting them carefully is a must for optimum performance.

It is not my intention to scare you with too many technicalities about laptop parts. You can skip this section if you want to. But it helps to know more, and this additional knowledge puts you in control when choosing a laptop that is a closer match to your needs.

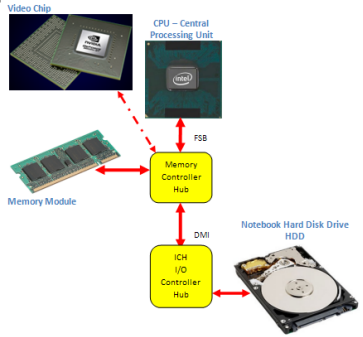

When you click an icon on the desktop to start an application, the processor pulls the necessary instructions (remember, all applications are programs, which are in turn sets of instructions) from the hard disk drive (HDD) and stores them temporarily in RAM. From RAM, a select set of data is pulled and stored temporarily in another location closer to the CPU, called the cache memory. The CPU executes the instructions in the cache memory in the order dictated by the programs themselves. The result of executing these instructions is what we see on the screen. The path the data travels from the hard disk drive (HDD) to the CPU is as follows:

Hard Disk Drive (HDD) -> RAM -> Memory Controller (MCH) -> Cache Memory -> CPU

This long path is necessitated by the fact that these components operate at different speeds. In relative terms, the CPU is the fastest and the hard disk drive (HDD) is the slowest. The speeds of RAM and cache memory fall in between, with cache memory being much faster than RAM. Any parameter of these components that speeds up data travel along this path improves our experience with the laptop.
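To make the speed argument concrete, here is a minimal sketch of why a fast cache in front of slower RAM pays off. It uses a deliberately simplified model in which every access is served either by the cache or by RAM, and the latency figures are hypothetical values picked only for illustration, not measurements of any real laptop.

```python
def effective_access_time_ns(hit_rate: float, cache_ns: float, ram_ns: float) -> float:
    """Average time per memory access when a fraction `hit_rate` is served by the cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_ns + (1.0 - hit_rate) * ram_ns


# Hypothetical latencies chosen only for illustration.
CACHE_NS = 2.0
RAM_NS = 60.0

for hit_rate in (0.50, 0.90, 0.99):
    avg = effective_access_time_ns(hit_rate, CACHE_NS, RAM_NS)
    print(f"hit rate {hit_rate:.0%}: about {avg:.1f} ns per access")
```

The more often the CPU finds what it needs in the cache, the closer the average access time gets to the cache's own speed, which is why a larger cache tends to help.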

In earlier Intel processors, the cache memory is part of the CPU itself, while the memory controller (MCH) sits outside the CPU as an additional chip. Later Intel processors, such as the Core i3, i5, and i7, all have built-in memory controllers.

In AMD processors, the memory controller (MCH), the cache memory, and the CPU are all packaged together as a single chip, which is simply called the CPU.

When the memory controller is embedded in the CPU, the memory is connected directly to the CPU, bypassing the motherboard chipset and thereby improving performance.

With this framework in the background, let us go over some of the parameters of the following components:

- Processor (CPU):

  - Clock speed: Measured in billions of cycles per second, or GHz. (Note: 1 cycle/sec = 1 Hz, and giga stands for a billion, hence gigahertz, or GHz for short.) The higher the clock speed, the faster the instructions are executed and hence the greater the performance.

  - Front-side bus (FSB): Measured in millions of cycles per second, or MHz. (Again, mega stands for a million, hence megahertz, or MHz for short.) The faster the FSB, the faster data travels to the CPU and hence the greater the performance.

  - Number of cores: A CPU with 4 cores is known as a Quad CPU, a CPU with 2 cores as a Duo CPU, and a CPU with a single core as a Solo CPU. In theory, the more cores there are, the faster instructions are processed, because the processing is shared among the available cores. Imagine 4 waitresses (Quad) waiting to take your order at the restaurant table vs. one waitress (Solo) who has disappeared into the kitchen with your order, leaving you wondering what happened.

  - Number of bits: It matters whether it is a 64-bit processor or a 32-bit processor. Earlier CPUs were 32-bit processors. Lately, 64-bit processors have become the norm.

  - Cache memory: Measured in megabytes, or MB for short. The larger the cache memory, the less often the CPU needs to reach out to RAM for additional data, and hence the faster the processing.

- RAM:

  - Size of memory: Measured in gigabytes, or GB for short. The larger the memory, the more data, and hence the more applications, can be held in RAM for the CPU to access. Hence the better the performance in a multitasking environment.

  - Technology adopted for data transfer from RAM to the CPU: DDR vs. DDR2 vs. DDR3. DDR2 allows a higher clock speed than DDR, so more gets done in a given time. The newer DDR3 technology offers nearly twice the bandwidth of DDR2 and hence is suited to graphics-rich applications. It also consumes less power and hence makes longer battery life possible.

  - Clock speed: The higher the clock speed, the better the performance. For a given technology, choose the memory module with the higher clock speed.

- Hard disk drive (HDD):

  - Interface between the hard drive and the motherboard: Whether the laptop uses a Serial ATA (SATA) or Parallel ATA (PATA) interface. PATA transfers data more slowly than SATA and is slowly being phased out. Always choose SATA over PATA.

  - The technology behind the hard drive itself: Whether it is a conventional mechanical drive or uses the emerging Solid State Drive (SSD) technology. An SSD is considerably faster than a mechanical drive at data transfer (notably for random access) and uses less power. Being faster, it increases the data transfer rate to the CPU; using less power, it makes the laptop battery last longer, so you can use the laptop for hours on end without having to look for a wall socket. Such advantages come at a price: SSDs are more expensive than conventional mechanical hard disk drives.

  - Speed of rotation: This applies to conventional mechanical hard disk drives only. The higher the speed of rotation, the faster data can be accessed for reading or writing. Go for a laptop with a faster-spinning hard drive if you can afford the price difference.
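Two of the parameters in the list above translate directly into time. The sketch below converts a clock speed in GHz into the duration of one cycle, and a drive's rotation speed into the average wait for data to come around under the read head (half a revolution). The function names are made up for this example, and the 5,400 and 7,200 rpm figures are common drive speeds used here as assumptions rather than values from this guide.

```python
def cycle_time_ns(clock_ghz: float) -> float:
    """Duration of one clock cycle in nanoseconds (1 GHz = 1e9 cycles per second)."""
    return 1.0 / clock_ghz


def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector to rotate under the head: half of one revolution."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


print(f"2.0 GHz CPU: {cycle_time_ns(2.0):.2f} ns per cycle")
for rpm in (5400, 7200):  # assumed example speeds
    print(f"{rpm} rpm drive: about {avg_rotational_latency_ms(rpm):.2f} ms average rotational latency")
```

The gap between nanoseconds for the CPU and milliseconds for a spinning disk is the reason RAM and cache sit between the two.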

I would like to tell you more about the screen and the display adapter, which also have a say in the overall experience you get out of your laptop. But let’s keep that for another day.

With the details covered on this page and the previous one, you are ready to move on to the key features of a laptop and some additional parameters in your purchasing decision.

The following table gives in a nutshell the key features, their relative importance and some pertinent remarks about each feature that would help in the buying decision.

| Feature | Importance | Remarks |

|---|---|---|

| Processor | Important | Buyers would do well to do some additional research before committing to a particular processor in a notebook, because once a processor is selected, little can be done by way of an upgrade and the buyer has to live with the selection. However, the following set of guidelines would help one start the selection: |

| System Memory | Important | Windows Vista needs lots of memory to run, and the more applications are run simultaneously, the greater the need for memory. The more memory in the system, and the faster it is, the better the performance. The bare minimum is 512 MB, 1 GB is adequate, and anything higher is better. It helps to have empty memory slots for future expansion. For example, although two 512 MB memory modules hold the same amount as a single 1 GB module, it is preferable to buy a laptop with the latter configuration, as it leaves room for expansion. In terms of speed, if two laptops come with the same amount of memory, say 1 GB, but one runs at 667 MHz and the other at 1066 MHz, it is preferable to buy the latter. |

| Screen Size | Important | The bigger the screen, the better. A bigger screen shows more of the details of a document or image. The higher the resolution, the better. If a given screen size, say 15.4″, is offered in two resolutions, 1280 x 800 or 1680 x 1050, it is better to choose the latter, as it shows the document or image more crisply. |

| Communication Ports | Important | By its very nature (mobility), a laptop is expected to connect effortlessly to the available network. At a minimum, it should have built-in wireless that supports the 802.11 b/g standards. It is even better if it supports the newer 802.11n standard, which makes for faster data transfer and covers a wider range. It is not a big deal if it also supports the less popular 802.11a. Support for integrated 10/100 Ethernet LAN and a V.92 56K data/fax modem is a must and comes with all laptops. Some models come with integrated 10/100/1000 Ethernet LAN support, which is better, as it transfers data at gigabit rates. An integrated webcam is very helpful for business customers interested in teleconferencing and for casual customers who chat over the Internet with friends and family. It does away with the clumsy cables of an external web camera plugged in later, not to mention carrying additional equipment from place to place. An integrated microphone for Internet chat is a definite convenience for the same needs and reasons. Support for Bluetooth is welcome but not essential. If one decides to have it, it is better to go with the latest version (V2.0). |

| Hard Drive Size | Less Important | |

| Optical Drive | Less Important | Laptop configurations with a DVD-RW drive are preferred over a DVD-ROM/CD-RW combo. Buyers interested in watching high-definition video are advised to check whether the notebook supports Blu-ray drives. |

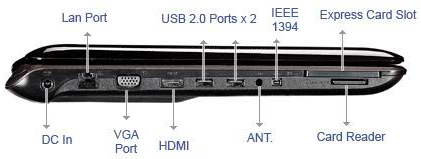

| Other ports | Less Important | A minimum of 2 USB 2.0 ports is essential in a laptop; anything more is better. Most peripherals (printers, cameras, scanners) support the USB 2.0 standard. PC Card slots are helpful in many ways, such as adding a serial port (a phased-out standard that is finding newer applications, like connecting to a satellite receiver), an IEEE 1394 FireWire port (for FireWire devices like a video camera), or an adapter with extra USB 2.0 ports (should you need more). Newer laptops come with an additional ExpressCard slot apart from the PC Card slots. The major benefit of an ExpressCard slot is the increased bandwidth it offers compared with the PC Card or USB 2.0 interfaces: ExpressCard can transfer data at 2.50 Gbit/s vs. 1.04 Gbit/s for PC Card and 480 Mbit/s for USB 2.0. It is very convenient to have slots for memory cards / flash cards that support as many formats as possible. |

| Warranty Support | Less Important | Some models come with a 1-year parts and labor warranty, some with 2 years, and some high-end models with 3 years. Here there is a tradeoff between cost and peace of mind (knowing that the laptop is covered for so many years). In my experience, laptops lately are prone to failure, especially low-end laptops and those that compete on price. So my recommendation is to go for as many years of warranty coverage as one’s budget can afford. It is also preferable to go for notebooks that are covered by international warranties. This will come in handy if one expects to travel often and be away from the country in which the notebook was bought. |

| Bundled Software | Not so important | Barring the operating system that comes with the laptop, the other software is not so important. This is because it consists either of trial versions of popular software that last only 2 to 3 months from the date of purchase (after which one needs to pay for the full version) or of utilities that the manufacturer provides anyway. |

| Price | Less Important | By now you are convinced that price is not that important a factor in the buying decision. You will agree with me that what matters most is the laptop's specifications, its reliability, its ease of use, warranty support, etc. In short, whether it will meet your needs. However, it is wise to shop around and look for the best deal on the notebook you have selected after careful consideration. It is also helpful to have a Plan B (an alternate model from the same brand with almost similar specs, or an alternate brand with the exact specs) in case your chosen model is out of stock or phased out. |
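The table quotes a few numbers that are easier to judge once converted into everyday terms. The sketch below works out ideal transfer times at the quoted interface rates and compares the pixel counts of the two quoted screen resolutions. The 700 MB file size is a hypothetical example, and the calculation ignores protocol overhead, so real transfers will be slower.

```python
# Interface rates quoted in the table, in bits per second.
RATES_BPS = {
    "ExpressCard": 2.50e9,
    "PC Card": 1.04e9,
    "USB 2.0": 480e6,
}


def transfer_seconds(size_bytes: float, rate_bps: float) -> float:
    """Ideal time to move `size_bytes` at `rate_bps`, ignoring protocol overhead."""
    return size_bytes * 8 / rate_bps


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels for a given screen resolution."""
    return width * height


file_size = 700 * 10**6  # hypothetical 700 MB file (decimal megabytes)
for name, rate in RATES_BPS.items():
    print(f"{name}: {transfer_seconds(file_size, rate):.1f} s")

ratio = pixel_count(1680, 1050) / pixel_count(1280, 800)
print(f"1680 x 1050 shows {ratio:.2f}x the pixels of 1280 x 800")
```

Run as written, this shows the same file taking roughly five times longer over USB 2.0 than over ExpressCard, and the higher resolution offering a little over 1.7 times as many pixels on the same 15.4″ screen.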

In the previous discussion we focused almost exclusively on hardware and very little on software. In particular, we left out the operating system, which makes all the hardware work together. Given its importance, it deserves a separate page for discussion.

As of September 2010, according to Market Share data, 91.08% of users prefer some version of the Windows operating system, followed by 5.03% who prefer Mac OS, while a minuscule 3.89% use the rest of the operating systems, which include Linux.

So what does this mean for you, the potential laptop buyer?

It means it is safe to choose Windows as the operating system for your notebook. Consider the following reasons and you will agree with me.

- There is a vast pool of Windows users all over the world, so Microsoft gets constant feedback from its users about possible improvements, the inherent problems of the Windows OS, and the threats users experience through the Internet. Hence it constantly brings out upgrades and updates to its products to maintain its market leadership. With constant updates, you can be reasonably sure that your laptop runs stably over the course of its life.

- Also, as the majority of applications are written for the Windows OS, you can rest assured that all your applications are well supported.

- In all likelihood, all your peripherals have drivers written for Windows, so you can connect them to your new laptop hassle-free and extend its functionality.

- Finally, the Windows OS is easy to use.

One concern that some users have is whether their existing 32-bit applications will run well in a 64-bit environment. The answer is that they should. Only on rare occasions, where you have legacy external hardware devices whose driver software is 32-bit only, will you have a problem connecting them to a 64-bit laptop. In such cases you may have to look for 64-bit drivers for such devices, then download and install them before connecting the devices.

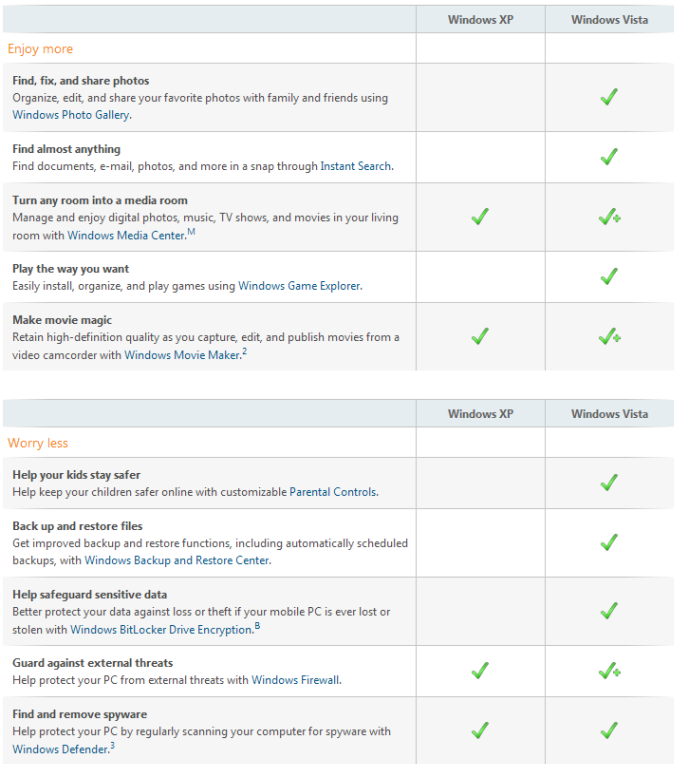

If you are with me so far, the next question is which version of Windows should you choose?

In simple terms this is what I would suggest:

Choose Windows XP Professional OS in the following situations:

- If you are currently running older applications on your other computers that are compatible only with Windows XP

- If any of your existing peripherals have drivers that are compatible only with Windows XP

- If your laptop is not so richly endowed with hardware such as large memory, high screen resolution, or a very fast processor

- If you have just bought an older laptop at a bargain price, with an older or low-capacity battery, and still want to make the best use of it

- If you are like me, a little conservative, playing it safe and waiting until others have given their verdict on a Microsoft OS that is better than Windows XP

Choose Windows Vista in the following situations:

- If all of your existing applications on other computers are compatible with Windows Vista

- If your existing peripherals have up-to-date drivers supported by Vista that you can easily download and install

- If your laptop is rich in specifications, boasting a very fast processor, a screen that supports high resolution, and huge memory

- If it comes with the latest battery with an impressive running time

- If you are a bit adventurous and would like to have the best experience of what the latest technology can offer
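The two checklists above can be sketched as a small helper. This is only an illustration of the reasoning, under the assumption that any single Windows XP condition is enough to tip the choice toward XP; the class and function names are hypothetical and the guide itself does not prescribe a scoring rule.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    apps_need_xp: bool             # some application only runs on Windows XP
    drivers_need_xp: bool          # some peripheral only has Windows XP drivers
    modest_hardware: bool          # limited memory, screen resolution, or processor speed
    weak_battery: bool             # older or low-capacity battery
    prefers_proven_software: bool  # would rather wait for others' verdict


def suggest_windows_version(s: Situation) -> str:
    """Suggest XP Professional if any XP condition applies, otherwise Vista."""
    # Assumption for this sketch: one XP condition is enough to choose XP.
    xp_reasons = [
        s.apps_need_xp,
        s.drivers_need_xp,
        s.modest_hardware,
        s.weak_battery,
        s.prefers_proven_software,
    ]
    return "Windows XP Professional" if any(xp_reasons) else "Windows Vista"


# Example: a well-equipped laptop whose owner has one XP-only peripheral driver.
print(suggest_windows_version(Situation(False, True, False, False, False)))
```

A buyer who weighs the conditions differently, for instance accepting the loss of one old peripheral, would simply relax the rule inside `suggest_windows_version`.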

The ‘+’ sign beside the check mark indicates this Windows XP feature is improved in Windows Vista.

Having gone through the above table, if you have chosen Windows Vista as the OS for your laptop, you then need to narrow down your choice further from among the four editions of Windows Vista (Source: www.microsoft.com), depending on which features you need in your laptop:

It should be noted that while the Windows OS is popular, it is proprietary software from Microsoft and hence has to be paid for. As the retail prices show, Vista Home Basic is cheaper with far fewer features, while Vista Ultimate comes with all the features but at a steep price. Please note that these are maximum suggested retail prices only; when bundled with a laptop, the OS should be a lot cheaper.

If you are on a tight budget and can’t afford to spend on an OS, you may want to consider laptops that come with the Linux (open source) operating system. Being open source software, it is free, and your whole package becomes a lot cheaper. However, you may want to find out which version of Linux comes preinstalled on the laptop, whether it is user-friendly, etc. before making a decision.

A word of caution here: before committing yourself, it is better to confirm that the OS (proprietary or open source) supports all the applications and all the hardware that you currently use or intend to use in the near future.

A letter written by a Father to his Children

Hi,

A few days ago, I received an email with the above title. I was very impressed by the message, so I am sharing it with you.

——————————————————————————————————————–

My Dear Child,

I am writing this to you for 3 reasons:

1. Life, fortune and mishaps are unpredictable; nobody knows how long they live! Some words are better said early!

2. I am your father, and if I don’t tell you these, no one else will!

3. What is written here comes from my own bitter personal experiences, and perhaps it could save you a lot of unnecessary heartache.

Remember the following as you go through life:

1. Do not bear a grudge towards those who are not good to you. No one has the responsibility of treating you well, except your mother and me. To those who are good to you, you have to treasure it and be thankful, and ALSO you have to be cautious, because everyone has a motive for every move. When a person is good to you, it does not mean he / she really likes you. You have to be careful; don't hastily regard him / her as a real friend.

2. No one is indispensable, and there is nothing in the world that you must possess. Once you understand this idea, it will be easier for you to go through life when people around you don't want you anymore, or when you lose what / who you love most.

3. Life is short. When you waste your life today, tomorrow you would find that life is leaving you. The earlier you treasure your life, the better you enjoy life.

4. Love is but a transient feeling, and this feeling would fade with time and with one’s mood. If your so called loved one leaves you, be patient, time will wash away your aches and sadness. Don’t over exaggerate the beauty and sweetness of love, and don’t over exaggerate the sadness of falling out of love.

5. A lot of successful people did not receive a good education, but that does not mean that you can be successful by not studying hard!

Whatever knowledge you gain is your weapon in life. One can go from rags to riches, but one has to start from some rags!

6. I do not expect you to financially support me when I am old, nor will I financially support you for your whole life. My responsibility as a supporter ends when you are grown up. After that, you decide whether you want to travel by public transport or in your limousine, whether rich or poor.

7. You honor your words, but don’t expect others to be so. You can be good to people, but don’t expect people to be good to you.

If you don’t understand this, you would end up with unnecessary troubles!

8. I have bought lottery tickets for umpteen years, but I have never won a prize. That shows that if you want to be rich, you have to work hard!

There is no free lunch!

9. No matter how much time I have with you, let’s treasure the time we have together. We do not know if we would meet again in our next life!

Your Dad

A Guide to Capacitors: Electrolytic Capacitor, Ceramic Capacitor and others

What is a capacitor and how does it work? When you go to a showroom and look at plasma panels, you may not realize that you are looking at capacitors. Yes, a plasma panel can be considered a capacitor.

A capacitor is a device able to store electric energy. In practice, whenever two conducting materials (called "plates") are placed close together and separated by a non-conducting material, we have a capacitor. In a PDP (Plasma Display Panel) the plates are the two glasses (front and rear panel) and the non-conducting material is the dielectric between them. If we apply a voltage to a capacitor, it charges up to the power supply potential. In a capacitor the process of storing energy is called "charging", and it involves electric charges of equal magnitude but opposite polarity on the two plates. Initially, in a flat (parallel-plate) capacitor, the plates are electrically neutral, since each carries the same number of electrons and protons.

Flat capacitor

Capacitor charging process

Voltage of a charging capacitor: V(t) = V0 [1 - e^(-t/(RC))]

Current of a charging capacitor: I(t) = I0 e^(-t/(RC))

Capacitor discharging process

Q = Q0 e^(-t/(RC))

where Q is the capacitor charge (Coulomb), Q0 is the charge at the start, "e" is the exponential number (Euler's number =2.718..), t is the time (Seconds), C is the capacitance (Farad), R the resistance (Ohm).

For voltage and current the equations become:

Voltage of a discharging capacitor: V = V0 e^(-t/(RC))

Current of a discharging capacitor: I = I0 e^(-t/(RC))

How long a capacitor takes to charge or discharge depends on the value of the resistance in series with it and on its capacitance. The time needed to charge the capacitor to about 63% of the applied voltage equals the product of resistance and capacitance. This product is called the time constant (τ), so

τ = R * C,

where τ is expressed in seconds, R in ohms and C in farads.

Moreover, a capacitor is considered fully charged after a time T = 5τ: during the first τ it charges to 63% of the applied voltage, and during every further τ it gains another 63% of the remaining difference, so after 5τ it has reached more than 99% of the applied voltage.
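The 63% and five-time-constant rules can be checked numerically. The following is a minimal Python sketch of the charging equation; the function name and the component values (5 V supply, 10 kΩ, 100 µF) are assumptions chosen only for illustration.

```python
import math

def charge_voltage(t, v0, r, c):
    """Voltage across a charging capacitor: V(t) = V0 * (1 - e^(-t/(R*C)))."""
    return v0 * (1.0 - math.exp(-t / (r * c)))

# Assumed example values, not taken from the article.
V0 = 5.0      # supply voltage in volts
R = 10e3      # series resistance in ohms
C = 100e-6    # capacitance in farads
tau = R * C   # time constant in seconds

for n in range(1, 6):
    v = charge_voltage(n * tau, V0, R, C)
    print(f"t = {n} tau: {v:.3f} V ({100 * v / V0:.1f}% of V0)")
```

After one time constant the voltage is at about 63% of the supply, and after five it is above 99%, which is why 5τ is commonly treated as "fully charged".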

The ability to store electric energy is called capacitance. It is directly proportional to the surface area of one plate (A), inversely proportional to the distance between the plates (d), and directly proportional to the relative static permittivity εr of the insulator used. The formula is

C = εr * ε0 * A/d

where ε0 is the vacuum permittivity. The unit of capacitance is the farad (F).
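As a worked example of the formula, here is a short Python sketch. The function name and the plate dimensions (1 cm² plates, 0.1 mm apart, εr = 4) are hypothetical values picked for illustration.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity in F/m

def parallel_plate_capacitance(eps_r, area_m2, distance_m):
    """C = eps_r * eps_0 * A / d for an ideal flat capacitor."""
    return eps_r * EPSILON_0 * area_m2 / distance_m

# Assumed example: 1 cm^2 plates, 0.1 mm apart, dielectric with eps_r = 4.
c = parallel_plate_capacitance(4.0, 1e-4, 1e-4)
print(f"C = {c * 1e12:.1f} pF")

# Halving the distance doubles the capacitance.
c_thin = parallel_plate_capacitance(4.0, 1e-4, 0.5e-4)
print(f"C with half the gap = {c_thin * 1e12:.1f} pF")
```

The result is in the tens of picofarads, which shows why large capacitances need either very thin dielectrics or high-permittivity materials, as the following sections describe.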

The insulator placed between the plates is called the dielectric, and it can be liquid, solid or gaseous. The dielectric type provides a first way of classifying capacitors. The capacitors most used in electronics are those with an air or solid dielectric. The most common solid dielectrics are mica, ceramic, plastic film and paper. The capacitance value is either printed in clear on the capacitor body (on the larger ones) or encoded with a colour or alphanumeric code. Now let's have a look at some capacitor types, their features and their application areas.

Electrolytic capacitors

Electrolytic capacitors are made of two metal foils, wound into a cylinder, separated by a thin oxide layer obtained through an electrolytic process. The very thin layer (approx. 0.001 µm) and its relatively high relative static permittivity make very large capacitance values possible (up to 1,000,000 µF in aluminium electrolytic capacitors), although they can withstand a potential difference of only a few tens of volts. Because of their structure they are polarized, that is, polarity must be respected: one plate must always be positive and the other always negative. Reversing the polarity is very dangerous: the capacitor could explode.

As mentioned above, they have large capacitances, so they can store a large amount of energy. For this reason they are used mainly in power supply units, for voltage smoothing and ripple reduction.

Ceramic capacitors

Ceramic capacitors consist of a sandwich of conducting sheets alternating with ceramic material. The dielectric is a ceramic agglomerate whose relative static permittivity can be varied from 10 to 10,000 by choosing the composition. Ceramic capacitors with a low relative static permittivity have a stable capacitance and very low losses, so they are preferred in floating and high-precision circuits. Those with a high relative static permittivity provide high capacitance in a small space. Ceramic capacitors are generally small and are preferred at high frequencies. The most common shape is the disc: a small ceramic disc metallized on both sides, with the leads soldered to the two faces. They typically have very small capacitances, from a few pF to a few nF, and can withstand large potential differences.

Paper capacitors

In paper capacitors the dielectric is a special paper impregnated with a fluid or viscous substance. To improve the insulation, two or more layers are often combined. The finished winding is impregnated again under vacuum with insulating oil or dipped in resin. They are generally used as filter capacitors.

Plastic film capacitors

Plastic film membranes can be produced thinner than impregnated paper and are more uniform. Capacitors that use these membranes as the dielectric (only a few µm thick) can withstand high voltages. Plastic film capacitors are mainly used in transistor circuits. In polyester capacitors, either a metal foil is used as the conducting layer or the metal is deposited directly on the film by vacuum evaporation, with a layer thickness of 0.02 to 0.05 µm. The capacitance of these capacitors can reach a few µF. They are mainly used in low-frequency circuits.

Tantalum capacitors

Tantalum capacitors, like electrolytic ones, are polarized, but they use tantalum pentoxide as the dielectric. Compared with electrolytic capacitors, they offer better temperature stability and better high-frequency behaviour, but they cannot withstand over-voltage peaks and can be damaged, sometimes exploding violently. They are also more expensive and have much lower capacitance.

Niobium capacitors

Tantalum capacitors have two drawbacks: the cost of tantalum, due to the rarity of the material, and a susceptibility to thermal runaway failures at low ppm levels. Because of the increasing demand for tantalum capacitors, a new technology has been developed and niobium capacitors have been brought to market. With deposits at least 100 times larger than those of tantalum, niobium guarantees good availability and a lower price. Niobium capacitors are very similar to tantalum ones, but they offer low cost and surge robustness, and there is growing confidence that they can perform better in other respects such as voltage range, ESR and miniaturisation.

GPS receiver design

GNSS (Global Navigation Satellite System) is the common name for all satellite-based positioning systems: GPS (Global Positioning System) from the US, Galileo from the EU, GLONASS from Russia, and CNSS (Compass Navigation Satellite System) from China. GPS is the first and most popular of these systems.

In the US, the FCC ordered all network operators to comply with the E911 (Enhanced 911) rules and provide the physical location of any party making a 911 emergency call. As a result, more and more cellular phones are equipped with GPS. At the same time, navigation devices are becoming popular thanks to the fast growth in private cars and mobile phones in emerging countries. Thanks to Google, more and more consumers can easily connect their GPS devices to Google web services for navigation, virtual sightseeing or simply to satisfy their curiosity. All of these services are available free of charge.

Google's Inspiration

Google is a great web innovator. Everybody knows Google Maps and Google Earth, and competitors like Microsoft and Yahoo have had to catch up. However, Google did not invent web GIS, which has been available for a long time. Nevertheless, Google promotes web GIS through its great influence on the Internet, and delivers its free services in a quick and elegant way (AJAX). More and more companies and developers have identified business opportunities in integrating existing navigation technologies with web GIS. The new success stories spread around the world and attract the attention of venture capital. As a result, the GPS ecosystem has become highly competitive and exciting.

Competitive Market

A successful GPS application is made up of GPS terminals, map data services and service centers. GPS applications are therefore a blend of businesses involving the Internet, mobile terminals, mobile networks, automotive and consumer electronics. More and more industries are looking for new business opportunities in navigation and location-aware services through mergers and acquisitions. There is a clear trend for map data and services to be the key factors in business success. As usual, silicon suppliers and device manufacturers have to fight for market share by making devices cheaper, and startup companies must release products with unique features. Some suppliers offer dual-mode or tri-mode satellite positioning chipsets for GPS, Galileo and CNSS. Some independent RFIC vendors team up with software suppliers to promote software GPS solutions with a reduced BOM cost. Other vendors are promoting single-chip RFICs that combine all RF features, including Bluetooth, FM radio and GPS.

GPS Receiver Architecture

GPS works by making one-way range measurements between the satellites and the receiver. To arrive at a position fix we must know precisely where the satellites are and how far we are from them. The receiver obtains this information by reading the data message from each satellite, which provides a precise description of the satellite orbit, together with timing information used to determine when the signal was transmitted. Each satellite transmits on two frequencies in the L band (L1=1575.42 MHz and L2=1227.6 MHz), using its own unique CDMA (Code Division Multiple Access) code, a pseudo-random noise (PRN) sequence. On top of this, the signal is modulated with a 50 Hz data message that carries the precise timing information and orbital parameters. Since the receiver knows which sequence is assigned to each satellite, it knows which satellite the data is coming from. The receiver creates a copy of the sequence and correlates, that is, integrates the received signal multiplied by this copy over a period of time (in our case 1 ms). The particular sequence transmitted by each satellite has been chosen to reduce the chance that a receiver will track a satellite transmitting a different PRN sequence. For more detail on correlators see the Zarlink chipset documentation or some of the other references.
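The correlation step can be illustrated with a small Python sketch. It is a simplified model under stated assumptions: random +/-1 sequences stand in for the real PRN codes (they are not GPS Gold codes), one sample is taken per chip, and Doppler shift and the 50 Hz data message are ignored. All function names are hypothetical.

```python
import random

CODE_LENGTH = 1023  # chips in one 1 ms period of the civilian GPS code

def make_code(seed):
    """Random +/-1 sequence standing in for one satellite's PRN code (assumption)."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(CODE_LENGTH)]

def correlate(received, replica, shift):
    """Multiply the received samples by the replica delayed by `shift` chips and sum."""
    n = len(replica)
    return sum(received[i] * replica[(i - shift) % n] for i in range(n))

def find_delay(received, replica):
    """Try every circular shift and return (best_shift, correlation_at_best_shift)."""
    scores = [correlate(received, replica, s) for s in range(len(replica))]
    best = max(range(len(scores)), key=lambda s: abs(scores[s]))
    return best, scores[best]

code_a = make_code(1)   # the satellite we are looking for
code_b = make_code(2)   # another satellite on the same frequency
true_delay = 200        # unknown to the receiver

noise = random.Random(0)
received = [
    code_a[(i - true_delay) % CODE_LENGTH] + code_b[i] + noise.gauss(0.0, 1.0)
    for i in range(CODE_LENGTH)
]

delay, peak = find_delay(received, code_a)
print("estimated delay:", delay, "chips, correlation peak:", round(peak))
```

Only the matching replica at the matching delay produces a large sum; the other satellite's code and the noise average out. A real receiver repeats this search over Doppler frequency as well, which is one reason large correlator counts shorten the time to first fix.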

The attached figure shows a traditional GPS receiver architecture (from Zarlink). It is made up of an antenna, an RF/IF section and a baseband processing unit, which usually contains the correlators and an embedded processor. The host processor talks to the embedded processor using an industry-standard protocol called NMEA (National Marine Electronics Association) or, optionally, a proprietary protocol. The physical link between the processors may be a standard UART, USB or Bluetooth. Over USB and Bluetooth, the link has to emulate a virtual serial port so that it can talk to the high-level application software. The default NMEA baud rate is 4800bps; a higher rate brings little benefit.

Antenna

Because of miniaturization and multi-function requirements, designers face more and more challenges in antenna design, including interference from the human body, noise from embedded processors and external interference. An inexperienced engineer is better off copying the reference design from the application notes. Nevertheless, GPS antenna design is still easier than mobile phone antenna design: a recent mobile phone has to work at 800MHz, 900MHz and 1800MHz with a PA, and has to cope with strong internal noise.

The most commonly used GPS antennas are the helix and the patch antenna. The patch antenna is strongly directional and is used in most external GPS mice. The helix antenna is better suited to handheld GPS units: it offers a broader reception angle and works better than a patch antenna when it is close to the human body.

There are some off-the-shelf antennas available in the market. Most of them are external antennas, which offer better performance.

Sarantel offers GPS antennas with a full balun design, which provides 360-degree reception and high frequency selectivity, so that most noise is rejected. The company also offers a bulk ceramic antenna, billed as the smallest antenna in the world.

Mr. Mark Kesauer published an inexpensive external GPS antenna design in Circuit Cellar, available as a PDF document. The design uses commonly available components and materials.

RFIC

The RF parts of GPS receivers from different suppliers differ slightly, but most of these ICs share the same concept. The RF section includes an LNA, filter, PLL and BPSK demodulator. Maxim's MAX2769 is a representative RF IC for GPS receivers.

The RF front-end of a GPS receiver first amplifies the weak incoming signal with a low-noise amplifier (LNA), and then downconverts the signal to a low intermediate frequency (IF) of approximately 4MHz. This downconversion is accomplished by mixing the input RF signal with the local oscillator signal using one or two mixers. The resulting analog IF signal is converted to a digital IF signal by the analog-to-digital converter (ADC).

The MAX2769 integrates all these functions (LNA, mixer, and ADC), thus significantly reducing the development time for applications. The device offers a choice of two LNAs: one LNA features a very-low, 0.9dB noise figure, 19dB of gain, and -1dBm IP3, for use with passive antennas; the other LNA has a 1.5dB noise figure with slightly lower gain and power consumption, and a slightly higher IP3, for use with an active antenna.

There is a provision for external filtering at RF after the amplifier. The signal is then downconverted directly using the integrated 20-bit, sigma-delta, fractional-N frequency synthesizer together with a 15-bit integer divider to achieve virtually any desired IF between zero and 12MHz. A wide selection of possible IF filtering choices accommodates different schemes, such as those of Galileo.
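The downconversion arithmetic described above can be checked with a few lines of Python. This is a minimal sketch: the function names are hypothetical, the fractional-N relation is the generic textbook form rather than the MAX2769 register map (check the datasheet for the exact mapping), and the 16.368MHz reference with R = 16 and N = 1536 is an assumed example setting chosen because it yields an IF close to the 4MHz mentioned above.

```python
L1_HZ = 1575.42e6  # GPS L1 carrier frequency, as given in the text

def lo_frequency(f_ref_hz, r_div, n_div, frac=0, frac_bits=20):
    """Generic fractional-N synthesizer relation (assumption):
    f_LO = (f_ref / R) * (N + F / 2**frac_bits)."""
    return (f_ref_hz / r_div) * (n_div + frac / 2 ** frac_bits)

def intermediate_frequency(f_rf_hz, f_lo_hz):
    """An ideal mixer moves the RF signal down to |f_RF - f_LO|."""
    return abs(f_rf_hz - f_lo_hz)

# Assumed example settings, not taken from the article.
f_lo = lo_frequency(16.368e6, r_div=16, n_div=1536)
f_if = intermediate_frequency(L1_HZ, f_lo)

print(f"LO = {f_lo / 1e6:.3f} MHz, IF = {f_if / 1e6:.3f} MHz")
```

Changing the fractional word `frac` moves the LO in steps of the comparison frequency divided by 2^20, which is what allows almost any IF in the supported range to be selected.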

The overall gain from RF input to IF output can be tuned or automatically controlled over a 60dB to 115dB range. The output can be chosen as analog, CMOS, or limited differential. The internal ADC has a selectable output of one to three bits. The integrated reference oscillator enables operation with either a crystal or a temperature-compensated crystal oscillator (TCXO), and any input reference frequency from 8MHz to 44MHz can be used.

Correlators

The correlators are essential to the bit synchronization and decoding performed by the system. They feed their raw digital output to the embedded processor, which acquires, confirms, pulls in and tracks the satellites, and translates the results into the NMEA protocol that the host controller understands.

The correlators can be implemented in hardware or in software. Recently, the number of correlators has increased dramatically. An early product from Zarlink has 12 correlator channels. The newer SiRF-II has 1920 correlators inside, and the latest SiRF-III has the equivalent of over 200K correlators to reduce the TTFF (time to first fix). MediaTek (MTK) Taiwan has also released a low-cost GPS chip with 32 correlator channels. I use an MTK-based GPS for my own testing. It works fine, although I would still like more accuracy from the device. Its performance is good enough for a consumer-class GPS receiver.

Some open source projects have released FPGA-based correlators. Alternatively, the correlators can be implemented as an FFT-based software algorithm, which is referred to as software GPS and is described in an application note from Maxim.

Embedded Processor

The silicon suppliers are trying to promote their own GPS platforms. The task of the embedded processor is to perform the calculations, track the different satellites and interface with the host processor using NMEA. If you check the attached NMEA document, you will see that the embedded processor has to deal with a great many parameters in detail. The embedded processor therefore needs a large enough memory address space and sufficient processing power for intensive calculation. The ARM7TDMI is a 32-bit core that offers both. Peripherals including UART, USB and Bluetooth have been available for ARM for a long time. As a result, the latest GPS chips from different suppliers share the same trend of selecting the ARM7TDMI as the embedded processor.

There are some KPIs for a GPS receiver that depend on the correlators and on the software in the embedded processor.

- Cold Start: A cold start results when there is no valid Almanac or Ephemeris information available for the satellite constellation in SRAM, or when the time and/or position information is NOT known (i.e. starts at 0 in both cases). A cold start will also be initiated if an Almanac is valid but a fix cannot be achieved within 10 minutes of power-up. This could occur if the receiver has moved significantly since it was last powered up, but the position change and time are NOT initialized by the user.

- Warm Start: A warm start results when there is a valid Almanac, and the initial time and position are known in SRAM, but the ephemeris is NOT valid (i.e. more than 4 - 6 hours old).

- Hot Start: A hot start results when there is a valid Almanac, valid ephemeris (i.e. less than 4 - 6 hours old), and when accurate time and position information are also known in SRAM (position error less than 100km, time error less than 5 minutes).
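The three definitions above amount to a small decision procedure, sketched here in Python. The `ReceiverState` type and its field names are hypothetical. Two points are assumptions not settled by the text: the lower bound of the "4 - 6 hours" ephemeris limit is used, and a receiver with valid ephemeris but inaccurate time or position is treated as a warm start. The 10-minute fallback to a cold start is not modelled.

```python
from dataclasses import dataclass
from typing import Optional

EPHEMERIS_MAX_AGE_HOURS = 4.0   # text says 4 - 6 hours; lower bound assumed
MAX_POSITION_ERROR_KM = 100.0   # hot-start limits from the text
MAX_TIME_ERROR_MIN = 5.0

@dataclass
class ReceiverState:
    almanac_valid: bool
    ephemeris_age_hours: Optional[float]  # None if no ephemeris is stored
    position_error_km: Optional[float]    # None if position is unknown
    time_error_min: Optional[float]       # None if time is unknown

def classify_start(state):
    """Return 'cold', 'warm' or 'hot' for the given stored receiver state."""
    known = state.position_error_km is not None and state.time_error_min is not None
    if not state.almanac_valid or not known:
        return "cold"
    ephemeris_valid = (
        state.ephemeris_age_hours is not None
        and state.ephemeris_age_hours < EPHEMERIS_MAX_AGE_HOURS
    )
    accurate = (
        state.position_error_km < MAX_POSITION_ERROR_KM
        and state.time_error_min < MAX_TIME_ERROR_MIN
    )
    return "hot" if ephemeris_valid and accurate else "warm"

print(classify_start(ReceiverState(True, 1.0, 50.0, 2.0)))
print(classify_start(ReceiverState(True, 12.0, 50.0, 2.0)))
print(classify_start(ReceiverState(False, None, None, None)))
```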

Software GPS

If the hardware can be implemented in software, the total cost of a GPS receiver can be reduced. Different suppliers take different approaches. One solution is to merge the embedded processor into the host processor, so that the job is done in the host. In this solution, the basic hardware blocks such as the correlators are still kept. Some companies call this approach accelerated software GPS. The other solution is to take the digitized IF signal and implement the correlators and decoders in software. We can call this full software GPS. Software GPS is only available under commercial license, as a linkable library for a specific chip. Sometimes the software GPS license can be more expensive than a low-cost IC.

SiRF has acquired Centrality, which offers SoCs for GPS. Its Atlas is a software GPS product running on a 300MHz ARM microprocessor and a 200MHz DSP. This chip is the best seller in automotive navigators. It offers performance comparable to SiRF-III, at a lower price and with media player features.

NXP Software also offers its Spot GPS software for host application processors. Spot GPS is a commercial software package written in ANSI C. It is easy to deploy, since most recent smart phones have a 200MHz or even 500MHz processor inside.

There is also an open source GPS project called GPS world, which uses an ATMEGA32 for back-end processing. This project is not a complete software GPS solution, because it is built on a hardware correlator IC from Zarlink. However, if you are developing the firmware of a GPS receiver, it could be the base for your development. In the references for open source projects, you can find other software GPS designs.

Modules or DIY

Because GPS is quite sensitive to its environment, an inexperienced design can ruin the whole project. According to field reports, GPS modules have a fairly high failure rate on the production line. Some mobile phone manufacturers tried to design the GPS section themselves and ended up with a GPS phone design that was a complete fiasco. A module includes everything, assembled in a can about the size of a coin. There are many professional GPS module suppliers; LeadTek, Holux and others offer SiRF- and MTK-based solutions worldwide.

Application Software

From the point of view of system software development, the software engineer can treat the GPS unit as a standard serial port. Whichever OS is selected, a serial port is always available, either as a real RS232 port or as an emulated serial port over USB or Bluetooth. Anyone with experience of UART development can also develop GPS application software based on the NMEA protocol.

Finally, the high-level application software combines the map data with the coordinates from the GPS and presents the result to the user.
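To give an idea of what handling NMEA looks like on the host side, here is a minimal Python sketch that validates the checksum of one sentence and extracts the position from a GGA sentence. The function names and the sample field values are illustrative assumptions; reading the characters from the serial port is left out, and a real application would handle more sentence types and empty fields.

```python
from functools import reduce

def nmea_checksum(payload):
    """XOR of all characters between '$' and '*'."""
    return reduce(lambda acc, ch: acc ^ ord(ch), payload, 0)

def to_degrees(value, hemisphere, degree_digits):
    """Convert NMEA (d)ddmm.mmmm notation to signed decimal degrees."""
    degrees = int(value[:degree_digits])
    minutes = float(value[degree_digits:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result

def parse_gga(sentence):
    """Parse a GGA sentence into a dict; raise ValueError if it is invalid."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    payload, checksum = sentence[1:].split("*", 1)
    if nmea_checksum(payload) != int(checksum, 16):
        raise ValueError("checksum mismatch")
    fields = payload.split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    return {
        "utc": fields[1],
        "latitude": to_degrees(fields[2], fields[3], 2),
        "longitude": to_degrees(fields[4], fields[5], 3),
        "fix_quality": int(fields[6]),
        "satellites": int(fields[7]),
    }

# Illustrative sentence; the checksum is computed here rather than hard-coded.
payload = "GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,"
sentence = f"${payload}*{nmea_checksum(payload):02X}"

fix = parse_gga(sentence)
print(sentence)
print(fix)
```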

There is an open source project called Openmoko, sponsored by FIC Taiwan. It is essentially a mobile phone based on an ARM920T (S3C2440) microprocessor. The GSM/GPRS module and the GPS module are connected to serial ports of the ARM920T, so the phone works like a desktop PC with a GPRS modem and a GPS receiver. It is a good project from which to start building your own GPS terminals. The connected GPS device Dash Express is derived from Openmoko.

Many other open source projects are available as well. You can always find projects in your favorite language: Java, Python, C++, .NET and even web programming languages.

Touch Screen

Touch screen technology is widely used in PDAs, smart phones, PMPs, ATMs, information kiosks and many other types of equipment in industrial, medical and commercial environments. The technology behind these devices is not new: it was invented by Dr. Samuel C. Hurst in 1971. But it has become much hotter since the release of the popular iPhone and iPod touch. With new patents filed for touch screen technology, Apple has brought a new wave to this mature segment, and more companies are joining this revolution with improved interactive UIs, ICs, assembly modules and software components.

Conventional Touch Screen

Touch screens can be implemented with resistive, capacitive, infrared, surface acoustic wave, optical imaging, acoustic pulse and other emerging technologies. A kit is usually made up of a touch module and a controller, which measures the touch events as a frequency, voltage or current, encodes them and transmits them to the drivers running on the host controller. Different touch screen technologies suit different environments. The selection criteria are:

- Light Transmission

- Response Time

- Touch Accuracy

- Environment Requirement

- Lifecycle

- Surface Hardness

- Resolution

- Input Mode (Bare or gloved finger, stylus, pen)

- Display Size (Small, Middle or Large Screen)

- Multi-touch

Currently most portable devices use resistive touch screen modules, because this technology strikes a good balance between low cost and the required performance. Both stylus and finger operation are supported. With a resistive touch screen, most of the technical innovation takes place in the GUI system on the host. For example, the HTC S1 introduced a new UI called TouchFLO, which works efficiently with a single-touch screen: a clockwise or counterclockwise finger movement on a specific screen area zooms in or out on that part of the picture or web page. Sometimes this is more convenient, because the user needs just one hand to hold the device and the thumb of the same hand to operate it. The iPhone requires both hands to operate on the go: even if the user performs the gesture with two fingers of one hand, the other hand has to hold the device, unless it can be mounted somewhere. Besides, the HTC S1 supports both handwriting and a virtual keyboard, while the iPhone uses only a virtual keyboard. Typing European characters on a virtual keyboard might be a good idea, but it is not for Asian languages.

Other human-machine interaction research organizations have also invented a symbolic UI system for single-touch screens. For example, when the user handwrites an "h" to represent the home page, the computer shows the index for the whole system. This method is deployed in an automotive PC platform (a VIA x86 design), and it is very effective and attractive. The driver can handwrite on the screen while looking at the road, without staring at the screen or hunting for buttons. This UI therefore improves safety on the road.
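A single-touch circular gesture of this kind can be recognized entirely in host software. The sketch below is not HTC's implementation; it is one simple way to tell clockwise from counterclockwise strokes, using the signed area of the traced path. The function names, the area threshold and the mapping of clockwise to "zoom in" are all assumptions. Screen coordinates are assumed to have y increasing downwards.

```python
import math

def signed_area(points):
    """Shoelace formula; positive for a clockwise path when y grows downwards."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0

def classify_circular_gesture(points, min_area=100.0):
    """Map a traced path to 'zoom_in', 'zoom_out' or 'none' (assumed mapping)."""
    area = signed_area(points)
    if abs(area) < min_area:
        return "none"  # too small to be a deliberate circle
    return "zoom_in" if area > 0 else "zoom_out"

# Simulated touch samples: a circle of radius 50 pixels traced clockwise on screen.
clockwise = [
    (160 + 50 * math.cos(2 * math.pi * k / 16),
     120 + 50 * math.sin(2 * math.pi * k / 16))
    for k in range(16)
]

print(classify_circular_gesture(clockwise))
print(classify_circular_gesture(clockwise[::-1]))
```

Because only the sequence of single touch positions is needed, this works on any resistive panel that reports one point at a time.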

I still remember when B/W LCDs were being replaced on mobile phones. Color LCDs were more expensive and used more power, and engineers argued about whether using a color LCD in a mobile phone was a stupid idea or not. Then sales of mobile phones with B/W LCDs dropped quickly, and now over 90% of mobile phones are equipped with a color LCD, in bigger sizes and in dual-LCD configurations. This story tells us that consumer electronics product design is market driven rather than technology driven. So my conclusion is that, even if a single-touch screen is fully capable of every operation and has some advantages, multi-touch is a major trend for new touch-screen devices, because consumers love it. Let us look at it and find the resources to implement it ourselves.

Anyone who just tries to clone Apple's design will be disappointed. Multi-touch is a system design, not just a small improvement to the touch screen itself. Apple's initiative cannot be cloned, and it is protected by more than 200 patents. I found that Meizu was going to launch its MiniOne at CeBIT 2008, but in the end it was forced to shut down at that exhibition because of a separate MP3 infringement issue. The Meizu MiniOne does not support multi-touch either. I am surprised that Apple has not officially commented on the design of Meizu's MiniOne, even though the two look like twins. Ironically, Meizu has filed a design patent in China. A cloned design asking to be protected from being cloned again? I really hope they can use their resources on other, more suitable projects.

Multitouch

Traditional resistive and capacitive touch screens support only one touch hot area, i.e. they track one touch event at a time. If two fingers are placed on the touch screen or touch pad, the result returned to the host is the position of the last touch or a point somewhere between the fingers. To set up multiple touch hot areas, the designer must upgrade all three parts of the touch screen sub-system: the panel input module, the panel controller (ASIC or MCU) and the device drivers on the host. Of course, the application running on the host should support multi-touch as well. The capability of the touch screen controller limits the number of touch events that can be tracked. I am not so sure about application software support. In some operations the application has to track multi-touch events by itself; for example, multiple objects must be tracked individually in a multi-user game. In other cases, if the multi-touch input can be translated into a high-level zoom or rotate command, regular software should be able to support multi-touch operation as it is. In that case, the device driver must handle the multi-touch events and translate them into high-level commands. It is up to the system architect to design the whole software stack.
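The translation from raw two-finger events into high-level commands can be sketched as follows in Python. This is an illustration of the idea only, not any vendor's driver: the function names, the thresholds and the rule that rotation takes priority over zoom are assumptions.

```python
import math

def two_finger_gesture(start, end):
    """Given two finger positions at the start and end of a gesture,
    return (scale, rotation_degrees)."""
    def span(pair):
        (x1, y1), (x2, y2) = pair
        return math.hypot(x2 - x1, y2 - y1), math.atan2(y2 - y1, x2 - x1)

    d0, a0 = span(start)
    d1, a1 = span(end)
    if d0 == 0:
        raise ValueError("fingers start at the same point")
    scale = d1 / d0
    rotation = math.degrees(a1 - a0)
    rotation = (rotation + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return scale, rotation

def to_command(scale, rotation, zoom_threshold=0.1, rotate_threshold=10.0):
    """Turn the measurements into one high-level command (assumed thresholds)."""
    if abs(rotation) >= rotate_threshold:
        return ("rotate", rotation)
    if abs(scale - 1.0) >= zoom_threshold:
        return ("zoom", scale)
    return ("none", None)

# Fingers move apart horizontally: distance grows from 100 to 200 pixels.
pinch = two_finger_gesture(((100, 100), (200, 100)), ((50, 100), (250, 100)))
# One finger pivots a quarter turn around the other.
twist = two_finger_gesture(((0, 0), (10, 0)), ((0, 0), (0, 10)))

print(to_command(*pinch))
print(to_command(*twist))
```

An application that only understands "zoom" and "rotate" commands never sees the individual touch points, which is how existing software can gain multi-touch support from the driver layer alone.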

IBM and Logitech already hold some patents for multi-touch technology. Apple's iPhone is the first consumer product to deploy multi-touch. So far Apple has filed two patents for multi-touch: one for self-capacitance and the other for mutual capacitance. These technologies go by a new name, projected capacitive. Multi-touch has already been deployed in the iPhone, iPod and MacBook Air. The implementation is described on some patent search sites (please check the references). More competitors such as LG, Samsung and Microsoft are going to release new models and new operating systems with improved touch screen modules and drivers. Even MTK, a well-known supplier for OEM mobile phones, is going to improve its touch screen reference design and try to mimic the operation of the iPhone. Besides projected capacitive touch, there are resistive and surface capacitive implementations of multi-touch.

Input Modules

To allow people to operate it with multiple fingers, the iPhone uses a new arrangement of existing technology. It includes a layer of capacitive material, just like many other touch screens. However, the iPhone's capacitors are arranged according to a coordinate system. The coordinate system does not require very high precision, because it is designed for finger-based operation. I have no idea about its exact accuracy, but in any case it is not accurate enough to support handwriting. Its circuitry can sense changes at each point along the grid. In other words, every point on the grid generates its own signal when touched and relays that signal to the iPhone's processor. This allows it to determine the location and movement of touches on the capacitive material. It won't work if you use a stylus or wear non-conductive gloves.

In mutual capacitance, the capacitive circuitry requires two distinct layers of material. One houses driving lines, which carry current, and the other houses sensing lines, which detect the current at nodes. Self-capacitance uses one layer of individual electrodes connected to capacitance-sensing circuitry. Both of these possible setups send touch data as electrical impulses.

After all, Apple's projected capacitive touch screen works like a big keyboard matrix. Each key is driven by row and column pulses and encoded with its location and address. That working method and the programming skills it needs are very common in microcontrollers. The improvement is in the materials: these keys are almost transparent (Apple calls them transparent electrodes) and sit on a single film (maybe ITO film or ITO glass) that can be produced by an advanced process. Because the surface can be glass, you will find that the iPhone's glass is quite hard and scratch-resistant. According to Unwired View, the iPhone also uses a force-sensing mechanism to filter out accidental touch events.
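The keyboard-matrix comparison can be sketched in a few lines of Python. This is only an illustration under my own assumptions: read_node stands in for whatever the real controller does to measure one row/column crossing, and the baseline and threshold values are made up. It is not Apple's or Broadcom's firmware.

```python
def scan_grid(read_node, n_rows, n_cols, baseline, threshold):
    """Return the (row, col) nodes whose reading moved away from baseline.

    read_node(row, col) is a hypothetical callback that returns the raw
    measurement at one crossing of a driving line and a sensing line.
    """
    touched = []
    for row in range(n_rows):        # drive one row line at a time
        for col in range(n_cols):    # then sense each column line
            change = abs(read_node(row, col) - baseline[row][col])
            if change > threshold:
                touched.append((row, col))
    return touched


# Simulated 4x4 panel: every untouched node reads 100 counts.
baseline = [[100] * 4 for _ in range(4)]
readings = [row[:] for row in baseline]
readings[0][1] = 70   # first finger
readings[3][2] = 65   # second finger

touches = scan_grid(lambda r, c: readings[r][c], 4, 4, baseline, threshold=20)
print(touches)  # [(0, 1), (3, 2)]
```

Because every node is read individually, two fingers show up as two separate entries instead of one averaged position, which is exactly what a single-touch panel cannot report.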

Actually, Apple's iPhone only handles dual-touch events; it could support more events if Apple improved the capability of the controller, driver and application software.

Because of its natural similarity to LCD drivers, some LCD panel manufacturers are integrating this feature into LCD modules. AUO released in-cell multi-touch LCD modules (480*272) in October 2007. More and more LCDs with single-touch or multi-touch screens will become available. I personally think AUO's approach may be more competitive because of the overall BOM cost and performance. Neither AUO's in-cell nor Apple's projected capacitive multi-touch screen has been deployed on large LCDs, because capacitive sensing is very sensitive to EMC noise, while a large HDTV LCD is itself a big noise generator!

Controllers

Because a capacitive touch screen is very sensitive to its environment, even with the innovation in the input module, multi-touch requires further improvement in the controller to work properly. Maybe that is another reason why Apple keeps the metal back cover on the iPhone/iPod?

According to a report from howtostuffs.com, the iPhone's processor and software are central to correctly interpreting input from the touch screen. There are several processors in the iPhone: an Infineon GSM processor, a Samsung ARM11 as the application processor, and a dedicated screen controller, the BCM5974 from Broadcom. I could not find any information about it on Broadcom's site, so I guess it is a custom chip for Apple. After checking other products, I guess this chip is an ADC acting as the touch screen digitizer. If so, the ADC is used to sample the grid instead of a switching method. It also means the ARM11 does the processing for multi-touch. The processor uses software to interpret raw data as commands and gestures.

- Signals travel from the touch screen to the processor as electrical impulses.

- The processor uses software to analyze the data and determine the features of each touch. This includes size, shape and location of the affected area on the screen. If necessary, the processor arranges touches with similar features into groups. If you move your finger, the processor calculates the difference between the starting point and ending point of your touch.

- The processor uses its gesture-interpretation software to determine which gesture you made. It combines your physical movement with information about which application you were using and what the application was doing when you touched the screen.

- The processor relays your instructions to the program in use. If necessary, it also sends commands to the iPhone's screen and other hardware. If the raw data doesn't match any applicable gestures or commands, the iPhone disregards it as an extraneous touch.
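The grouping and movement steps in the list above can be sketched as follows. This is a minimal Python illustration under my own assumptions: the input is a list of (row, col) grid nodes that were sensed as touched, neighbouring nodes are taken to belong to the same finger, and the names group_touches and movement are hypothetical. The real iPhone software is not public, so this is only one plausible way to do it.

```python
from collections import deque


def group_touches(cells):
    """Group adjacent touched nodes into touches with a size and a center."""
    remaining = set(cells)
    touches = []
    while remaining:
        seed = remaining.pop()
        queue = deque([seed])
        blob = [seed]
        while queue:                      # flood fill over 4-neighbours
            r, c = queue.popleft()
            for nb in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if nb in remaining:
                    remaining.remove(nb)
                    queue.append(nb)
                    blob.append(nb)
        center = (sum(p[0] for p in blob) / len(blob),
                  sum(p[1] for p in blob) / len(blob))
        touches.append({"size": len(blob), "center": center})
    return sorted(touches, key=lambda t: t["center"])


def movement(start_center, end_center):
    """Difference between the ending point and starting point of a touch."""
    return (end_center[0] - start_center[0], end_center[1] - start_center[1])


# A fingertip covering four nodes and a second, smaller touch.
found = group_touches([(0, 0), (0, 1), (1, 0), (1, 1), (5, 5)])
print(found)
print(movement(found[0]["center"], (0.5, 3.5)))
```

A gesture interpreter would then compare the size, center and movement of each group against the gestures the current application accepts, and ignore groups that match nothing.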

There are some other suppliers of capacitive touch technology. Controllers are implemented either as ASICs or on MCUs. An ASIC is very low cost in mass production, while an MCU has the advantage of custom design but a higher price. The following vendors are delivering touch solutions to the market. Some of them already support multi-touch, and more vendors are developing new parts to support it. The vendors mentioned have their own patents on touch technology.

Synaptics is a leading worldwide developer of custom-designed user interface solutions for mobile computing, communications and entertainment devices. Synaptics is focusing on touch pad solutions, and offer ASIC with I2C/SMBus.

Taiwan's ELAN Microelectronics Company (EMC) also has a patent, under the eFinger trademark. It claims that the patent is competitive in touch screen applications. But its specification is available only after registration, and the company has an open lawsuit with Synaptics.

Cypress offers CapSense in many applications, for example the keypads of Motorola's V3/V8 and LG's Chocolate mobile phones. Cypress CapSense is based upon its PSoC mixed-signal array with an embedded RISC M8 microcontroller. It is very easy to use. The new PSoC CapSense touch screen solution also offers designers the ability to implement multiple additional functions beyond touch screens. The same devices can implement capacitive buttons and sliders simultaneously, replacing their mechanical counterparts, as well as proximity sensing. Engineers can also take advantage of the PSoC mixed-signal array to implement functions beyond CapSense. Such functions include driving LEDs, backlight control, motor control, power management, I/O expansion, accelerometers and ambient light sensors. These functions, in conjunction with flexible communication (I2C and SPI), allow for unparalleled system integration. Cypress's CapSense touch screen solution is available using projected capacitance and surface capacitance sensing techniques.

Leadis is dedicated to creating compelling touch solutions focused on a strategy of Innovation and Integration. Leadis' line of PureTouch (TM) capacitive touch controller solutions will begin sampling in the first half of 2008.

ATLab is a Korean company that cooperates with ST to offer capacitive touch screen ICs.

Quantum Research Group offers QProx(TM) touch control and sensor ICs. Like Cypress, its solution offers additional features such as QSlide and QWheel. Atmel has already acquired Quantum.

Thanks to Apple, more and more players are trying to compete in this market.

Other Suppliers

ST has two lines for touch pads: one is licensed from Quantum (MCU based) and the other from ATLab (ASIC based). TI is trying to promote its MSP430 in this market. NXP also has a companion chip for PDAs with a touch screen ADC inside. Some other vendors, such as Microchip and Maxim, have similar product lines.

Driver and Application Software

I am not quite sure how Apple implements these, so I will use "software" to refer to both the driver and the application software. Apple also filed a complementary patent called "multi-finger gesture" for the presentation of finger movement. But that part requires more know-how in system software, so I am not going to discuss it here. Anyone who is interested should visit some of the professional sites of MIT or NYU.

Latest Development for Alternative UI

Besides multi-touch, some more touch screen topics are appearing on the horizon. They include in-pixel photo sensors, polymer waveguides, distributed light, strain gauges, dual-force touch, laser-point-activated touch and 3D touch.

Patent Issues

Most of the multi-touch related patents for materials and panels are held by 3M, Nitto Denko, Oike-Kogyo, DuPont, Apple and IBM, but more Taiwanese and Korean companies are trying to file their own patents on touch panels. As system developers, we just need to make sure our products do not infringe these patents. It is tricky for those mobile phone manufacturers who are trying to clone Apple's design in their own products.

Hacking a Network Attached Storage (NAS)

In this article I try to find some candidate hardware platforms and Linux distributions, so that fans can build their own Network Attached Storage (NAS) or extend an existing NAS with more features by hacking it.

What is NAS?

NAS is the abbreviation of Network-Attached Storage. It was introduced by Novell to offer a network file sharing service. NAS was designed for enterprise applications, so it has supported UNIX from the very beginning. Consumers then realized they needed more storage capacity for the digital media files they get from the Internet. A consumer NAS can share media files with all the PCs and digital media players in the home. Furthermore, a NAS can download files from BitTorrent or eDonkey without a PC. The latest consumer NAS has turned into a fully functional media server with a variety of features.

Let us check out the long list:

- File sharing for Windows, Linux and Mac via Samba, NFS, HTTP, FTP and rsync;

- Easy data backup for flash cards, USB sticks and removable HDDs, with optional RAID support;

- A print server;

- A Media server for Windows MCE, Xbox360 and PS3 with UPnP/DLNA;

- A Web server with DDNS, PHP, ASP, SQLite and MySQL;

- An FTP server;

- An iTunes server;

- A 24-hour download server that supports BT, eDonkey and FTP;

- A home video surveillance server, which supports both IP camera and USB camera;

- Multiple administration panel choices in Web GUI, virtual console and custom terminal software;

- Much more …

Why Do We Need NAS (Network Attached Storage)?

Although we can use more powerful PCs in many applications, we still need embedded computers as NAS for reasons of security, power consumption and network access.

Security

A well-designed NAS can offer more security than a regular PC. Most NAS operating systems are based on Linux, so they have fewer virus problems compared to Windows. Even if the NAS has downloaded files infected by viruses, the embedded OS in the NAS will not be infected by them. Of course, you still have to scan the files with anti-virus software.

A dual-slot NAS usually offers a RAID backup service, so important data can be restored if one disk malfunctions.

Power Consumption

A PC is not designed to run 24 hours a day, 7 days a week. It can work for a long time, but I would not leave my PC on to download a huge file without knowing when it will finish. A NAS can work in more energy-efficient ways. If there is no active connection, the NAS can spin down its hard disk drives and wait for connections. The power consumption of a regular NAS depends on its hard disk drives and is about 5 W to 20 W. A NAS can save us money and help the environment with lower carbon emissions.
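To put the 5 W to 20 W figure into perspective, here is a small Python calculation of the energy used by a device that runs all year. The 100 W value for a PC is purely my own assumption for comparison; a real PC may draw more or less.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts):
    """Energy in kWh used by a device drawing `watts` continuously for a year."""
    return watts * HOURS_PER_YEAR / 1000.0


# 5 W and 20 W come from the text above; 100 W for a PC is an assumed value.
for label, watts in (("NAS (low)", 5), ("NAS (high)", 20), ("PC (assumed)", 100)):
    print(f"{label}: {annual_kwh(watts):.1f} kWh/year")
```

Under these assumptions the NAS uses roughly 44 to 175 kWh per year, against about 876 kWh for the assumed always-on PC. Multiply by your local electricity price to get the cost difference.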

Network Access